Traffic Light Classifier¶

Note: This is final project submitted to Udacity as is, so the sentences in 2nd person are from Udacity. Others are just me trying to answer and complete the project step by step as we go.

In this project, you’ll use your knowledge of computer vision techniques to build a classifier for images of traffic lights! You'll be given a dataset of traffic light images in which one of three lights is illuminated: red, yellow, or green.

In this notebook, you'll pre-process these images, extract features that will help us distinguish the different types of images, and use those features to classify the traffic light images into three classes: red, yellow, or green. The tasks will be broken down into a few sections:

Loading and visualizing the data. The first step in any classification task is to be familiar with your data; you'll need to load in the images of traffic lights and visualize them!

Pre-processing. The input images and output labels need to be standardized. This way, you can analyze all the input images using the same classification pipeline, and you know what output to expect when you eventually classify a new image.

Feature extraction. Next, you'll extract some features from each image that will help distinguish and eventually classify these images.

Classification and visualizing error. Finally, you'll write one function that uses your features to classify any traffic light image. This function will take in an image and output a label. You'll also be given code to determine the accuracy of your classification model.

Evaluate your model. To pass this project, your classifier must be >90% accurate and never classify any red lights as green; it's likely that you'll need to improve the accuracy of your classifier by changing existing features or adding new features. I'd also encourage you to try to get as close to 100% accuracy as possible!

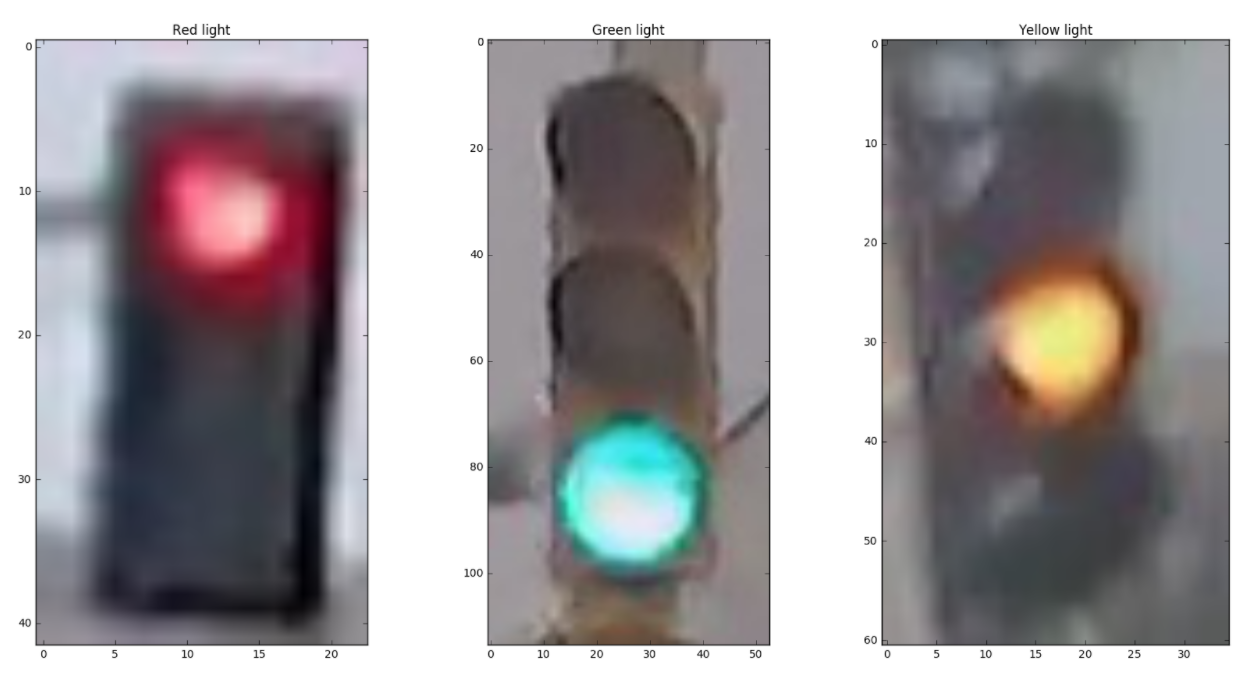

Here are some sample images from the dataset (from left to right: red, green, and yellow traffic lights):

</img?>

</img?>

Here's what you need to know to complete the project:¶

Some template code has already been provided for you, but you'll need to implement additional code steps to successfully complete this project. Any code that is required to pass this project is marked with '(IMPLEMENTATION)' in the header. There are also a couple of questions about your thoughts as you work through this project, which are marked with '(QUESTION)' in the header. Make sure to answer all questions and to check your work against the project rubric to make sure you complete the necessary classification steps!

Your project submission will be evaluated based on the code implementations you provide, and on two main classification criteria. Your complete traffic light classifier should have:

- Greater than 90% accuracy

- Never classify red lights as green

1. Loading and Visualizing the Traffic Light Dataset¶

This traffic light dataset consists of 1484 number of color images in 3 categories - red, yellow, and green. As with most human-sourced data, the data is not evenly distributed among the types. There are:

- 904 red traffic light images

- 536 green traffic light images

- 44 yellow traffic light images

Note: All images come from this MIT self-driving car course and are licensed under a Creative Commons Attribution-ShareAlike 4.0 International License.

Import resources¶

Before you get started on the project code, import the libraries and resources that you'll need.

import cv2 # computer vision library

import helpers # helper functions

import random

import numpy as np

import matplotlib.pyplot as plt

import matplotlib.image as mpimg # for loading in images

%matplotlib inline

Training and Testing Data¶

All 1484 of the traffic light images are separated into training and testing datasets.

- 80% of these images are training images, for you to use as you create a classifier.

- 20% are test images, which will be used to test the accuracy of your classifier.

- All images are pictures of 3-light traffic lights with one light illuminated.

Define the image directories¶

First, we set some variables to keep track of some where our images are stored:

IMAGE_DIR_TRAINING: the directory where our training image data is stored

IMAGE_DIR_TEST: the directory where our test image data is stored# Image data directories

IMAGE_DIR_TRAINING = "traffic_light_images/training/"

IMAGE_DIR_TEST = "traffic_light_images/test/"

Load the datasets¶

These first few lines of code will load the training traffic light images and store all of them in a variable, IMAGE_LIST. This list contains the images and their associated label ("red", "yellow", "green").

You are encouraged to take a look at the load_dataset function in the helpers.py file. This will give you a good idea about how lots of image files can be read in from a directory using the glob library. The load_dataset function takes in the name of an image directory and returns a list of images and their associated labels.

For example, the first image-label pair in IMAGE_LIST can be accessed by index:

IMAGE_LIST[0][:].

# Using the load_dataset function in helpers.py

# Load training data

IMAGE_LIST = helpers.load_dataset(IMAGE_DIR_TRAINING)

Visualize the Data¶

The first steps in analyzing any dataset are to 1. load the data and 2. look at the data. Seeing what it looks like will give you an idea of what to look for in the images, what kind of noise or inconsistencies you have to deal with, and so on. This will help you understand the image dataset, and understanding a dataset is part of making predictions about the data.

Visualize the input images¶

Visualize and explore the image data! Write code to display an image in IMAGE_LIST:

- Display the image

- Print out the shape of the image

- Print out its corresponding label

See if you can display at least one of each type of traffic light image – red, green, and yellow — and look at their similarities and differences.

# The first image in IMAGE_LIST is displayed below (without information about shape or label)

selected_image = IMAGE_LIST[0][0]

plt.imshow(selected_image)

## TODO: Write code to display an image in IMAGE_LIST (try finding a yellow traffic light!)

## TODO: Print out 1. The shape of the image and 2. The image's label

# ANSWER: Below I have created 3 sub plots, and plotted each image type, along with type as Title.

f, (ax1, ax2, ax3) = plt.subplots(1, 3, figsize=(20,10))

# red image

num = 0 # 0 to 722 is red

red_image = IMAGE_LIST[num][0]

red_label = IMAGE_LIST[num][1]

ax1.set_title(red_label + ' light')

ax1.imshow(red_image)

# yellow image

num = 723 # 723 to 757 is yellow

yellow_image = IMAGE_LIST[num][0]

yellow_label = IMAGE_LIST[num][1]

ax2.set_title(yellow_label + ' light')

ax2.imshow(yellow_image)

# green image

num = 758 # 758 to 1187 is green

green_image = IMAGE_LIST[num][0]

green_label = IMAGE_LIST[num][1]

ax3.set_title(green_label + ' light')

ax3.imshow(green_image)

# download images for working in local PC

#!tar chvfz notebook.tar.gz *

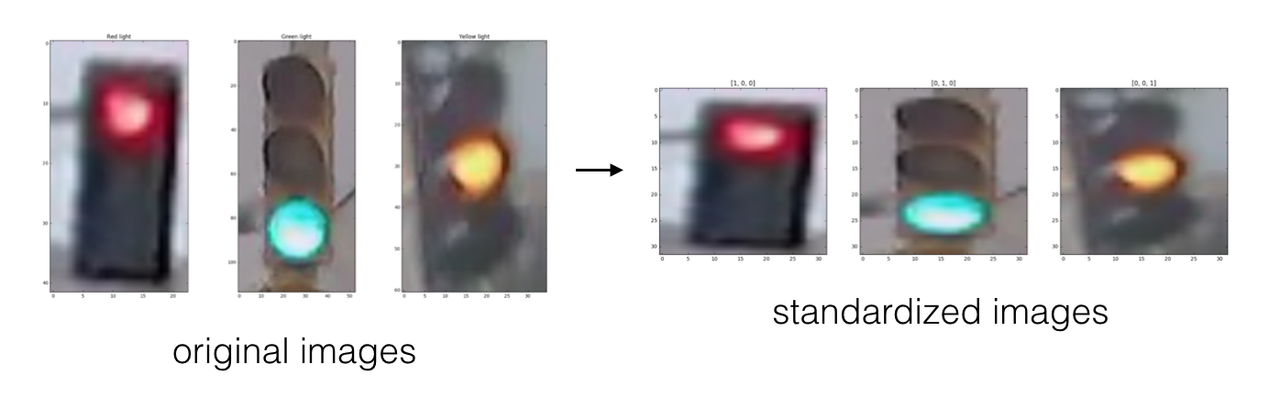

2. Pre-process the Data¶

After loading in each image, you have to standardize the input and output!

Input¶

This means that every input image should be in the same format, of the same size, and so on. We'll be creating features by performing the same analysis on every picture, and for a classification task like this, it's important that similar images create similar features!

Output¶

We also need the output to be a label that is easy to read and easy to compare with other labels. It is good practice to convert categorical data like "red" and "green" to numerical data.

A very common classification output is a 1D list that is the length of the number of classes - three in the case of red, yellow, and green lights - with the values 0 or 1 indicating which class a certain image is. For example, since we have three classes (red, yellow, and green), we can make a list with the order: [red value, yellow value, green value]. In general, order does not matter, we choose the order [red value, yellow value, green value] in this case to reflect the position of each light in descending vertical order.

A red light should have the label: [1, 0, 0]. Yellow should be: [0, 1, 0]. Green should be: [0, 0, 1]. These labels are called one-hot encoded labels.

(Note: one-hot encoding will be especially important when you work with machine learning algorithms).

(IMPLEMENTATION): Standardize the input images¶

- Resize each image to the desired input size: 32x32px.

- (Optional) You may choose to crop, shift, or rotate the images in this step as well.

It's very common to have square input sizes that can be rotated (and remain the same size), and analyzed in smaller, square patches. It's also important to make all your images the same size so that they can be sent through the same pipeline of classification steps!

# This function should take in an RGB image and return a new, standardized version

def standardize_input(image):

## TODO: Resize image and pre-process so that all "standard" images are the same size

standard_im = np.copy(image)

standard_im = cv2.resize(image, (32,32))

return standard_im

Standardize the output¶

With each loaded image, we also specify the expected output. For this, we use one-hot encoding.

- One-hot encode the labels. To do this, create an array of zeros representing each class of traffic light (red, yellow, green), and set the index of the expected class number to 1.

Since we have three classes (red, yellow, and green), we have imposed an order of: [red value, yellow value, green value]. To one-hot encode, say, a yellow light, we would first initialize an array to [0, 0, 0] and change the middle value (the yellow value) to 1: [0, 1, 0].

## TODO: One hot encode an image label

## Given a label - "red", "green", or "yellow" - return a one-hot encoded label

# Examples:

# one_hot_encode("red") should return: [1, 0, 0]

# one_hot_encode("yellow") should return: [0, 1, 0]

# one_hot_encode("green") should return: [0, 0, 1]

def one_hot_encode(label):

## TODO: Create a one-hot encoded label that works for all classes of traffic lights

one_hot_encoded = [0,0,0]

if label is 'red':

one_hot_encoded[0] = 1

elif label is 'yellow':

one_hot_encoded[1] = 1

else: #green

one_hot_encoded[2] = 1

return one_hot_encoded

Testing as you Code¶

After programming a function like this, it's a good idea to test it, and see if it produces the expected output. In general, it's good practice to test code in small, functional pieces, after you write it. This way, you can make sure that your code is correct as you continue to build a classifier, and you can identify any errors early on so that they don't compound.

All test code can be found in the file test_functions.py. You are encouraged to look through that code and add your own testing code if you find it useful!

One test function you'll find is: test_one_hot(self, one_hot_function) which takes in one argument, a one_hot_encode function, and tests its functionality. If your one_hot_label code does not work as expected, this test will print ot an error message that will tell you a bit about why your code failed. Once your code works, this should print out TEST PASSED.

# Importing the tests

import test_functions

tests = test_functions.Tests()

# Test for one_hot_encode function

tests.test_one_hot(one_hot_encode)

Construct a STANDARDIZED_LIST of input images and output labels.¶

This function takes in a list of image-label pairs and outputs a standardized list of resized images and one-hot encoded labels.

This uses the functions you defined above to standardize the input and output, so those functions must be complete for this standardization to work!

def standardize(image_list):

# Empty image data array

standard_list = []

# Iterate through all the image-label pairs

for item in image_list:

image = item[0]

label = item[1]

# Standardize the image

standardized_im = standardize_input(image)

# One-hot encode the label

one_hot_label = one_hot_encode(label)

# Append the image, and it's one hot encoded label to the full, processed list of image data

standard_list.append((standardized_im, one_hot_label))

return standard_list

# Standardize all training images

STANDARDIZED_LIST = standardize(IMAGE_LIST)

Visualize the standardized data¶

Display a standardized image from STANDARDIZED_LIST and compare it with a non-standardized image from IMAGE_LIST. Note that their sizes and appearance are different!

## TODO: Display a standardized image and its label

f, (ax1, ax2) = plt.subplots(1, 2, figsize=(20,10))

# non standardized image

num = 23

red_image = IMAGE_LIST[num][0]

red_label = 'red'

ax1.set_title(red_label + ' light')

ax1.imshow(red_image)

# standardized image

red_image = STANDARDIZED_LIST[num][0]

red_label = 'red'

ax2.set_title(red_label + ' light')

ax2.imshow(red_image)

3. Feature Extraction¶

You'll be using what you now about color spaces, shape analysis, and feature construction to create features that help distinguish and classify the three types of traffic light images.

You'll be tasked with creating one feature at a minimum (with the option to create more). The required feature is a brightness feature using HSV color space:

A brightness feature.

- Using HSV color space, create a feature that helps you identify the 3 different classes of traffic light.

- You'll be asked some questions about what methods you tried to locate this traffic light, so, as you progress through this notebook, always be thinking about your approach: what works and what doesn't?

(Optional): Create more features!

Any more features that you create are up to you and should improve the accuracy of your traffic light classification algorithm! One thing to note is that, to pass this project you must never classify a red light as a green light because this creates a serious safety risk for a self-driving car. To avoid this misclassification, you might consider adding another feature that specifically distinguishes between red and green lights.

These features will be combined near the end of his notebook to form a complete classification algorithm.

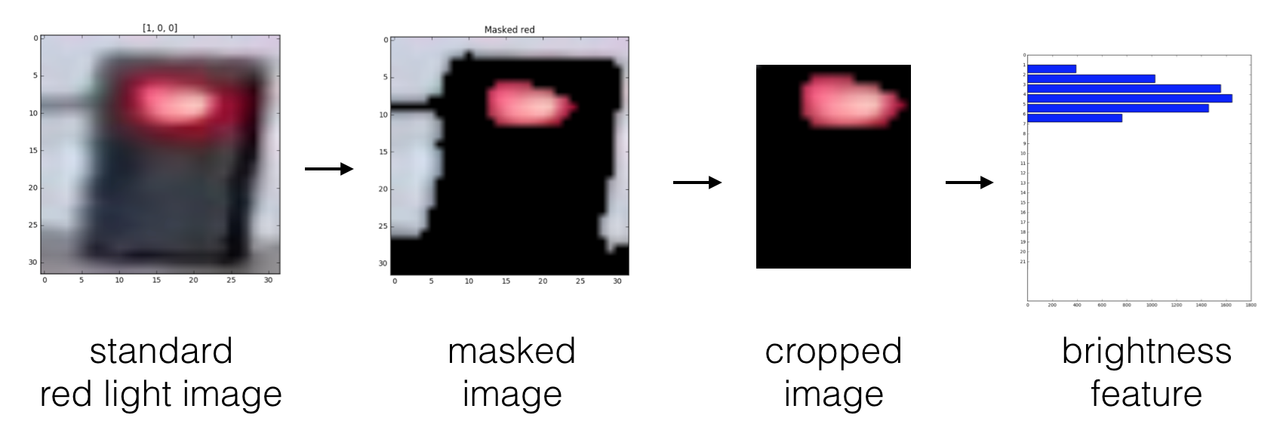

Creating a brightness feature¶

There are a number of ways to create a brightness feature that will help you characterize images of traffic lights, and it will be up to you to decide on the best procedure to complete this step. You should visualize and test your code as you go.

Pictured below is a sample pipeline for creating a brightness feature (from left to right: standardized image, HSV color-masked image, cropped image, brightness feature):

RGB to HSV conversion¶

Below, a test image is converted from RGB to HSV colorspace and each component is displayed in an image.

# Convert and image to HSV colorspace

# Visualize the individual color channels

image_num = 0

test_im = STANDARDIZED_LIST[image_num][0]

test_label = STANDARDIZED_LIST[image_num][1]

# Convert to HSV

hsv = cv2.cvtColor(test_im, cv2.COLOR_RGB2HSV)

# Print image label

print('Label [red, yellow, green]: ' + str(test_label))

# HSV channels

h = hsv[:,:,0]

s = hsv[:,:,1]

v = hsv[:,:,2]

# Plot the original image and the three channels

f, (ax1, ax2, ax3, ax4) = plt.subplots(1, 4, figsize=(20,10))

ax1.set_title('Standardized image')

ax1.imshow(test_im)

ax2.set_title('H channel')

ax2.imshow(h, cmap='gray')

ax3.set_title('S channel')

ax3.imshow(s, cmap='gray')

ax4.set_title('V channel')

ax4.imshow(v, cmap='gray')

(IMPLEMENTATION): Create a brightness feature that uses HSV color space¶

Write a function that takes in an RGB image and returns a 1D feature vector and/or single value that will help classify an image of a traffic light. The only requirement is that this function should apply an HSV colorspace transformation, the rest is up to you.

From this feature, you should be able to estimate an image's label and classify it as either a red, green, or yellow traffic light. You may also define helper functions if they simplify your code.

## DEPENDENT SNIPPETS

def getMaskedImage(image, label):

"""

Returns the masked image as per label specified.

NOTE INCOMING IMAGE IS RGB (if you used cv.imread,it would be BGR. I used matplotlib imread)

"""

# first convert to HSV

image_hsv = cv2.cvtColor(image, cv2.COLOR_RGB2HSV)

if label is 'red':

red_mask_hsv1 = cv2.inRange(image_hsv, (0,30,50), (10,255,255))

red_mask_hsv2 = cv2.inRange(image_hsv, (150,40,50), (180,255,255))

mask_hsv = cv2.bitwise_or(red_mask_hsv1,red_mask_hsv2)

elif label is 'yellow':

mask_hsv = cv2.inRange(image_hsv, (10,10,110),(31,255,255))

else: # green

mask_hsv = cv2.inRange(image_hsv,(43,40,120),(95,255,255))

# standard mask operations to extract out specified label color

mask_hsv = cv2.bitwise_not(mask_hsv) # invert the mask

masked_image = np.copy(image)

masked_image[mask_hsv != 0] = [0, 0, 0]

return masked_image

def getHSVSum(image):

"""

Input: RGB image (ensure, its not BGR)

Output: HSV sums individually

"""

# first convert to HSV

image_hsv = cv2.cvtColor(image, cv2.COLOR_RGB2HSV)

# HSV channels

h = image_hsv[:,:,0]

s = image_hsv[:,:,1]

v = image_hsv[:,:,2]

h_sum = np.sum(h[:,:],axis=1)

s_sum = np.sum(s[:,:],axis=1)

v_sum = np.sum(v[:,:],axis=1)

return (h_sum, s_sum, v_sum)

## TODO: Create a brightness feature that takes in an RGB image and outputs a feature vector and/or value

## This feature should use HSV colorspace values

def create_feature(rgb_image):

# crop the images first

image = rgb_image[2:-2, 7:-7, :]

# image dimensions

(width,height,_) = image.shape

area = width*height

# apply all masks and get results

r_mask = getMaskedImage(image,'red')

y_mask = getMaskedImage(image,'yellow')

g_mask = getMaskedImage(image,'green')

# get hsv_sum graphs

r_hsv_sum = getHSVSum(r_mask)

y_hsv_sum = getHSVSum(y_mask)

g_hsv_sum = getHSVSum(g_mask)

# get v channel (brightness)

r_hsv_sum_v = r_hsv_sum[2]

y_hsv_sum_v = y_hsv_sum[2]

g_hsv_sum_v = g_hsv_sum[2]

# get associated metrics for each v channel

# 1. average brightness normalized across area

r_hsv_sum_v_avg = round(np.sum(r_hsv_sum_v)/area,1)

y_hsv_sum_v_avg = round(np.sum(y_hsv_sum_v)/area,1)

g_hsv_sum_v_avg = round(np.sum(g_hsv_sum_v)/area,1)

# 2. max brightness (both x and y) normalized across area.

#'x' denotes location across height of image

r_hsv_sum_v_max_x = np.argmax(r_hsv_sum_v)

y_hsv_sum_v_max_x = np.argmax(y_hsv_sum_v)

g_hsv_sum_v_max_x = np.argmax(g_hsv_sum_v)

r_hsv_sum_v_max_y = round(r_hsv_sum_v[r_hsv_sum_v_max_x]/width,1)

y_hsv_sum_v_max_y = round(y_hsv_sum_v[y_hsv_sum_v_max_x]/width,1)

g_hsv_sum_v_max_y = round(g_hsv_sum_v[g_hsv_sum_v_max_x]/width,1)

## FEATURE CLASSIFICATION. returns ['red'] or ['yellow'] or ['green']

feature = [0,0,0] # one hot encoding in order of red, yellow, green

# 1. choose whick masked image has highest max

max_max_y = np.argmax([r_hsv_sum_v_max_y, y_hsv_sum_v_max_y, g_hsv_sum_v_max_y])

max_avg = np.argmax([r_hsv_sum_v_avg, y_hsv_sum_v_avg, g_hsv_sum_v_avg])

if (max_max_y == 0) and (max_avg == 0): # red

feature[0] = 1

elif (max_max_y == 1) and (max_avg == 1): # yellow

feature[1] = 1

elif (max_max_y == 2) and (max_avg == 2): # green

feature[2] = 1

# UPDATE 1

if (g_hsv_sum_v_avg < r_hsv_sum_v_avg) and (g_hsv_sum_v_max_y > r_hsv_sum_v_max_y) and (g_hsv_sum_v_max_x > 20):

feature[2] = 1

#print(list(feature))

return feature

(Optional) Create more features to help accurately label the traffic light images¶

# (Optional) Add more image analysis and create more features

(QUESTION 1): How do the features you made help you distinguish between the 3 classes of traffic light images?¶

Answer:

Challenge 1: Visualization

I tried to visualize all the images in various ways, and that took a long time to grasp various aspects of the image. I plotted for all images initially, HSV and RGB graphs, but each execution was too time consuming (Here is an SO question I created, to reduce time, but no solution). So I tried to visualize randomly only few images and its metrics at a time. This approach helped to speed up the progress.

Challenge 2: Masking

It took a lot of time to figure out good enough ranges for masking. openCV uses a different standard than normal HSV ranges (0-180, 0-255,0-255), so I even created a color picker, to get openCV values during manual sampling of images for colors. Till end of flow, few tweaks on masks were needed.

Challenge 3: Feature Extraction

Initially I plotted HSV for one image at a time randomly. This did not give enough overview. So I created a huge array of sub plots, plotting all HSV values for all masks of each randomly selected image of each label type. This facilitated figuring out suitable brightness ranges and location of peaks for feature extraction. Below is how it is generated. Creating this snippet was toughest but worth it.

It takes about 30 to 40 seconds to execute below code, so please wait. Also, every time,random 10 images from each label are selected, so you could also try running multiple times if time permits

%matplotlib inline

from visualizer_parthi2929 import visualize

from IPython.display import HTML

import time

start_time = time.time()

anim = visualize(STANDARDIZED_LIST)

HTML(anim.to_html5_video()) # click on slider if not auto playing..

elapsed_time = time.time() - start_time

print('Elapsed time for above animation: {}'.format(elapsed_time))

4. Classification and Visualizing Error¶

Using all of your features, write a function that takes in an RGB image and, using your extracted features, outputs whether a light is red, green or yellow as a one-hot encoded label. This classification function should be able to classify any image of a traffic light!

You are encouraged to write any helper functions or visualization code that you may need, but for testing the accuracy, make sure that this estimate_label function returns a one-hot encoded label.

# This function should take in RGB image input

# Analyze that image using your feature creation code and output a one-hot encoded label

def estimate_label(rgb_image):

## TODO: Extract feature(s) from the RGB image and use those features to

## classify the image and output a one-hot encoded label

predicted_label = create_feature(rgb_image)

return predicted_label

Testing the classifier¶

Here is where we test your classification algorithm using our test set of data that we set aside at the beginning of the notebook! This project will be complete once you've pogrammed a "good" classifier.

A "good" classifier in this case should meet the following criteria (and once it does, feel free to submit your project):

- Get above 90% classification accuracy.

- Never classify a red light as a green light.

Test dataset¶

Below, we load in the test dataset, standardize it using the standardize function you defined above, and then shuffle it; this ensures that order will not play a role in testing accuracy.

# Using the load_dataset function in helpers.py

# Load test data

TEST_IMAGE_LIST = helpers.load_dataset(IMAGE_DIR_TEST)

# Standardize the test data

STANDARDIZED_TEST_LIST = standardize(TEST_IMAGE_LIST)

# Shuffle the standardized test data

random.shuffle(STANDARDIZED_TEST_LIST)

Determine the Accuracy¶

Compare the output of your classification algorithm (a.k.a. your "model") with the true labels and determine the accuracy.

This code stores all the misclassified images, their predicted labels, and their true labels, in a list called MISCLASSIFIED. This code is used for testing and should not be changed.

# Constructs a list of misclassified images given a list of test images and their labels

# This will throw an AssertionError if labels are not standardized (one-hot encoded)

def get_misclassified_images(test_images):

# Track misclassified images by placing them into a list

misclassified_images_labels = []

# Iterate through all the test images

# Classify each image and compare to the true label

for image in test_images:

# Get true data

im = image[0]

true_label = image[1]

assert(len(true_label) == 3), "The true_label is not the expected length (3)."

# Get predicted label from your classifier

predicted_label = estimate_label(im)

assert(len(predicted_label) == 3), "The predicted_label is not the expected length (3)."

# Compare true and predicted labels

if(predicted_label != true_label):

# If these labels are not equal, the image has been misclassified

misclassified_images_labels.append((im, predicted_label, true_label))

# Return the list of misclassified [image, predicted_label, true_label] values

return misclassified_images_labels

# Find all misclassified images in a given test set

MISCLASSIFIED = get_misclassified_images(STANDARDIZED_TEST_LIST)

# Accuracy calculations

total = len(STANDARDIZED_TEST_LIST)

num_correct = total - len(MISCLASSIFIED)

accuracy = num_correct/total

print('Accuracy: ' + str(accuracy))

print("Number of misclassified images = " + str(len(MISCLASSIFIED)) +' out of '+ str(total))

Visualize the misclassified images¶

Visualize some of the images you classified wrong (in the MISCLASSIFIED list) and note any qualities that make them difficult to classify. This will help you identify any weaknesses in your classification algorithm.

# Visualize misclassified example(s)

## TODO: Display an image in the `MISCLASSIFIED` list

## TODO: Print out its predicted label - to see what the image *was* incorrectly classified as

num = 1

misclassified_image = MISCLASSIFIED[num][0]

misclassified_label = MISCLASSIFIED[num][1]

true_label = MISCLASSIFIED[num][2]

print('Actual label: {}'.format(list(true_label)))

print('Predicted label: {}'.format(list(misclassified_label)))

plt.imshow(misclassified_image)

(Question 2): After visualizing these misclassifications, what weaknesses do you think your classification algorithm has? Please note at least two.¶

Answer:

- Some images are extremely washed out, its difficult to distinguish the colors.

- Some images have misleading mixtures of colors. In one of green signals, red spread was more.

Mosty green signals ended up misclassified. Check two images below, and my masks' outcome. The original images (top left, titled with ID) are totally washed out and colors indistinguishable.

Tweaking masks and extraction further could help. But that may also affect past performance.

Test if you classify any red lights as green¶

To pass this project, you must not classify any red lights as green! Classifying red lights as green would cause a car to drive through a red traffic light, so this red-as-green error is very dangerous in the real world.

The code below lets you test to see if you've misclassified any red lights as green in the test set. This test assumes that MISCLASSIFIED is a list of tuples with the order: [misclassified_image, predicted_label, true_label].

Note: this is not an all encompassing test, but its a good indicator that, if you pass, you are on the right track! This iterates through your list of misclassified examples and checks to see if any red traffic lights have been mistakenly labelled [0, 1, 0] (green).

# Importing the tests

import test_functions

tests = test_functions.Tests()

if(len(MISCLASSIFIED) > 0):

# Test code for one_hot_encode function

tests.test_red_as_green(MISCLASSIFIED)

else:

print("MISCLASSIFIED may not have been populated with images.")

5. Improve your algorithm!¶

Submit your project after you have completed all implementations, answered all questions, AND when you've met the two criteria:

- Greater than 90% accuracy classification

- No red lights classified as green

If you did not meet these requirements (which is common on the first attempt!), revisit your algorithm and tweak it to improve light recognition -- this could mean changing the brightness feature, performing some background subtraction, or adding another feature!

Going Further (Optional Challenges)¶

If you found this challenge easy, I suggest you go above and beyond! Here are a couple optional (meaning you do not need to implement these to submit and pass the project) suggestions:

- (Optional) Aim for >95% classification accuracy.

- (Optional) Some lights are in the shape of arrows; further classify the lights as round or arrow-shaped.

- (Optional) Add another feature and aim for as close to 100% accuracy as you can get!